NVM Express and NVM Express over Fabrics

Traditional storage devices are usually tape drives or hard disk drives (HDDs), and the SCSI protocol is used to communicate with them. Hard drives are slow mechanical devices with spinning platters and magnetic heads for reading and writing, which makes access to the stored data slow. That is why the computer architecture uses a cascading approach to reading data and feeding it to the CPU. RAM is volatile memory, which means that its content is lost when the power is removed, but it is significantly faster than a hard drive. It is managed by the CPU and holds chunks of data read from the hard drives, ready for consumption by the CPU. RAM is very fast, but the CPU is faster still, so additional layers of cache memory read from RAM and pass the data on toward the CPU. The data feed is thus optimized, but the characteristics of the hard drive still affect the performance of the computer.

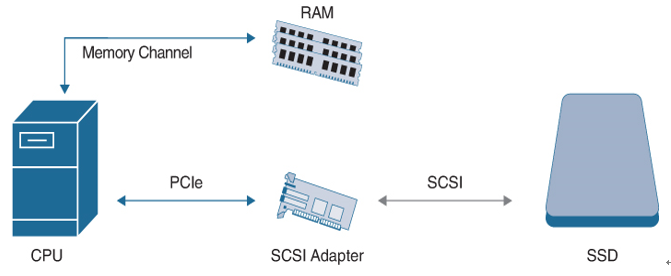

Solid-state drives (SSDs) were developed later. They serve the same purpose as hard drives, but instead of being electromechanical devices with spinning components, they are based on flash memory. SSDs are much faster because, like RAM, they have no moving parts, yet they are nonvolatile memory, which means that the data is preserved after the power is off. Initially, the SSDs that replaced HDDs used the SCSI protocol for communication. This meant that a SCSI controller was still needed to manage them and to communicate with the CPU, and the same message-based communication remained in place. Figure 10-18 illustrates the SCSI SSD communication and compares it to the communication between the CPU and the RAM.

Figure 10-18 SCSI SSD

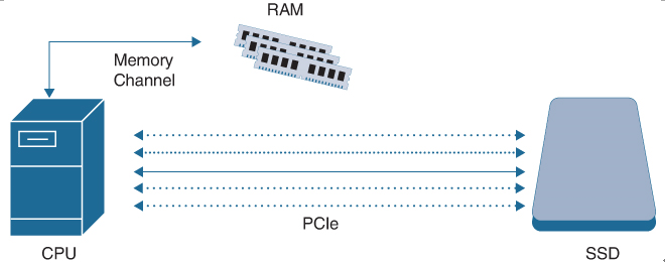

To overcome this challenge, Non-Volatile Memory Express (NVMe), also known as the Non-Volatile Memory Host Controller Interface Specification (NVMHCIS), was developed. NVMe is a specification that defines how nonvolatile media (NAND flash memory, in the form of SSDs and M.2 cards) can be accessed over the PCIe bus using memory semantics. In other words, it defines how flash storage can be treated as memory, just like RAM, so that the SCSI controller and protocol are no longer needed. Figure 10-19 illustrates the NVMe concept.

Figure 10-19 NVMe Concept

NVMe has been designed from the ground up and brings significant advantages:

- Very low latency.

- A larger number of command queues (65,535 queues).

- Each queue supports 65,535 commands.

- Simpler command set (the CPU talks natively to the storage).

- No adapter needed.

For comparison, with the SCSI protocol and a SATA controller, there is support for one queue and up to 32 commands per queue. With NVMe there are 65,535 queues, each supporting 65,535 commands. This shows the huge difference and impact of NVMe as a technology, especially in the data center, where constantly increasing workloads demand more resources, including storage devices and the communication with them.
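To put these queue figures side by side, the short calculation below multiplies the number of queues by the commands per queue for each case. It is only a back-of-the-envelope sketch: the constants are the limits quoted in the text, and the function and variable names are illustrative, not part of any NVMe or SATA tooling.

```python
# Queue limits as quoted in the text (theoretical maximums, not measured values).
SATA_QUEUES = 1
SATA_COMMANDS_PER_QUEUE = 32
NVME_QUEUES = 65_535
NVME_COMMANDS_PER_QUEUE = 65_535


def max_outstanding_commands(queues: int, commands_per_queue: int) -> int:
    """Upper bound on commands that can be queued at the same time."""
    return queues * commands_per_queue


sata_total = max_outstanding_commands(SATA_QUEUES, SATA_COMMANDS_PER_QUEUE)
nvme_total = max_outstanding_commands(NVME_QUEUES, NVME_COMMANDS_PER_QUEUE)

print(f"SATA: {sata_total:,} outstanding commands")
print(f"NVMe: {nvme_total:,} outstanding commands")
print(f"NVMe allows roughly {nvme_total // sata_total:,} times more")
```

These are ceilings defined by the interface. A real device or driver typically exposes far fewer queues, often about one per CPU core, so the practical gain comes from parallelism across cores rather than from filling every queue.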

Cisco Unified Computing System (UCS) C-series rack and B-series blade servers support NVMe-capable flash storage, which is best suited for applications requiring ultra-high performance and very low latency. This is achieved by using non-oversubscribed PCIe lanes as a connection between the multicore CPUs in the servers and the NVMe flash storage, bringing the storage as close as possible to the CPU.

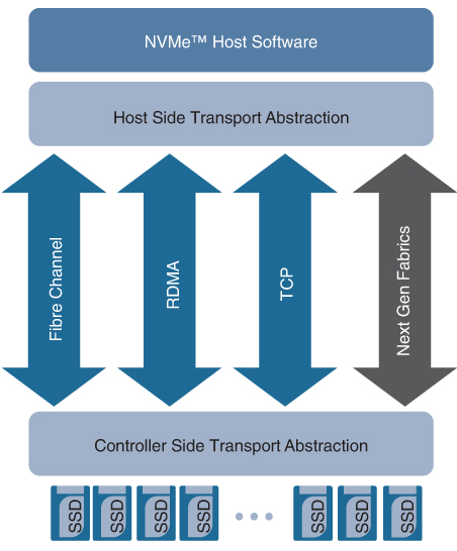

The NVMe brings a huge improvement in the performance of the local storage, but in the data centers the data is stored on storage systems, as it needs to be accessed by different applications running on different servers. The adoption of SSDs as a technology also changed the DC storage systems. Now there are storage systems whose drives are only SSDs, also called all-flash arrays. For such storage systems to be able to communicate with the servers and to bring the benefits of the flash-based storage, Non-Volatile Memory Express over Fabrics (NVMe-oF) was developed. It specifies how a transport protocol can be used to extend the NVMe communication between a host and a storage system over a network. The first specification of this kind was NVMe over Fibre Channel (FC-NVMe) from 2017. Since then, the supported transport protocols for the NVMe-oF are as follows (see Figure 10-20):

Figure 10-20 NVMe Concept

- Fibre Channel (FC-NVMe)

- TCP (NVMe/TCP)

- Ethernet/RDMA over Converged Ethernet (RoCE)

- InfiniBand (NVMe/IB)

NVMe-oF follows the same idea as iSCSI, where the SCSI commands are encapsulated in IP packets with TCP as the transport protocol, and as Fibre Channel, where FCP is used to transport the SCSI commands. With NVMe-oF, the NVMe commands are encapsulated and carried by the chosen transport protocol.
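The encapsulation idea can be sketched in a few lines of code. The example below is a toy model only: the `ToyCommand` layout, the transport tag header, and the `encapsulate` and `decapsulate` helpers are assumptions made for illustration and do not reproduce the real NVMe-oF capsule format or any transport's framing. It shows only that the same command travels unchanged, whatever transport wraps it.

```python
import struct
from dataclasses import dataclass
from typing import Tuple


@dataclass
class ToyCommand:
    """Hypothetical, simplified storage command (not the real NVMe layout)."""
    opcode: int
    namespace_id: int
    start_block: int
    block_count: int

    _LAYOUT = "<BIQH"  # 1-byte opcode, 4-byte namespace, 8-byte block, 2-byte count

    def to_bytes(self) -> bytes:
        return struct.pack(self._LAYOUT, self.opcode, self.namespace_id,
                           self.start_block, self.block_count)

    @classmethod
    def from_bytes(cls, raw: bytes) -> "ToyCommand":
        return cls(*struct.unpack(cls._LAYOUT, raw))


def encapsulate(transport: str, command: ToyCommand) -> bytes:
    """Wrap the command in a made-up header: tag length, tag, payload length."""
    tag = transport.encode("ascii")
    payload = command.to_bytes()
    return struct.pack("<B", len(tag)) + tag + struct.pack("<H", len(payload)) + payload


def decapsulate(frame: bytes) -> Tuple[str, ToyCommand]:
    """Strip the made-up header and recover the original command."""
    tag_len = frame[0]
    tag = frame[1:1 + tag_len].decode("ascii")
    (payload_len,) = struct.unpack_from("<H", frame, 1 + tag_len)
    start = 1 + tag_len + 2
    return tag, ToyCommand.from_bytes(frame[start:start + payload_len])


# The same command is carried unchanged over each transport named in the list.
command = ToyCommand(opcode=0x02, namespace_id=1, start_block=2048, block_count=8)
for transport in ("FC", "TCP", "RoCE", "IB"):
    received_transport, received_command = decapsulate(encapsulate(transport, command))
    print(received_transport, received_command == command)
```

The design point is the separation of concerns: the host and the storage system agree on the command, while the fabric only needs to deliver the wrapped bytes.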

Fibre Channel is the preferred transport protocol for connecting all-flash arrays in the data centers because of its secure, fast, scalable, and plug-and-play architecture. FC-NVMe offers the best of Fibre Channel and NVMe. You get the improved performance of NVMe along with the flexibility and scalability of the shared storage architecture.

FC-NVMe is supported by the Cisco MDS switches. Here are some of the advantages:

- Multiprotocol support: NVMe and SCSI are supported over FC, FCoE, and FCIP.

- No hardware changes: No hardware changes are needed to support NVMe, just a software upgrade of NX-OS.

- Superior architecture: Cisco MDS 9700 Series switches have superior architecture that can help customers build their mission-critical data centers. Their fully redundant components, non-oversubscribed and nonblocking architecture, automatic isolation of failure domains, and exceptional capability to detect and automatically recover from SAN congestion are a few of the top attributes that make these switches the best choice for high-demand storage infrastructures that support NVMe-capable workloads.

- Integrated storage traffic visibility and analytics: The 32Gbps products in the Cisco MDS 9000 family of switches offer Cisco SAN Telemetry Streaming, which can be combined with the FC-NVMe traffic with just a nondisruptive software-only upgrade.

- Strong ecosystem: Cisco UCS C-series rack servers with Broadcom (Emulex) and Cavium (QLogic) HBAs.